Build Your Own Real-Time AI Voice Assistant with a Live Transcript Using N8N and the OpenAI Realtime API

The dream of having a fluid, real-time conversation with an AI, like something out of science fiction, is closer than ever. Forget clunky text interfaces or waiting for delayed audio responses. What if you could build your own voice assistant that not only talks back almost instantly but also shows you a live transcript of the conversation?

Thanks to the power of low-code automation platforms like N8N and cutting-edge APIs like OpenAI's Realtime endpoint, this isn't just possible; it's surprisingly straightforward!

I recently put together an N8N workflow that does exactly this, and I want to share how it works. It combines the flexibility of N8N with the low-latency capabilities of OpenAI's realtime model (gpt-4o-realtime-preview) to create a seamless voice interaction experience right in your browser.

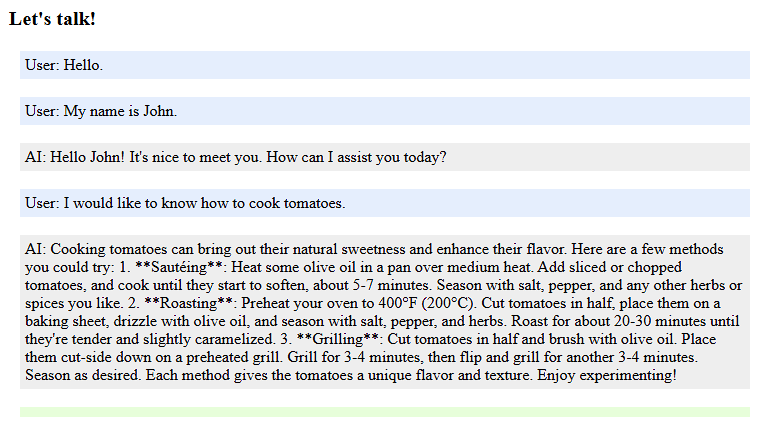

The Goal: Create a web-based interface where a user can speak to an AI, hear its response almost instantly, and see both their own words and the AI's words transcribed live on screen.

The Tools:

- N8N: Our low-code workflow automation platform. It acts as the orchestrator, connecting the web request to the AI service.

- OpenAI Realtime API: The star player. It provides the AI model (gpt-4o-realtime-preview), the synthesized voice (alloy), transcription of the user's audio (whisper-1), and real-time session management.

- Web Browser (with JavaScript): The user interface, handling microphone input, audio output, and the real-time communication (WebRTC) with OpenAI.

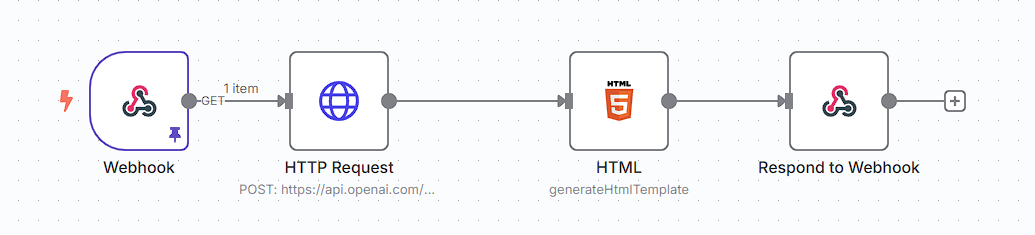

Dissecting the N8N Workflow:

The N8N workflow itself is elegantly simple, consisting of just four nodes:

- Webhook Node:

- Purpose: This is the entry point. It listens for incoming HTTP requests. When you navigate to the webhook's URL in your browser, the workflow starts.

- Configuration: The response mode is set to responseNode, so the reply is sent by the "Respond to Webhook" node at the end of the workflow. The path is set to something memorable, such as realtime-ai.

- HTTP Request Node (OpenAI Session Setup):

- Purpose: This is where the backend work happens. It calls the OpenAI API endpoint /v1/realtime/sessions to create a new real-time session.

- Configuration:

- Method: POST

- URL: https://api.openai.com/v1/realtime/sessions

- Authentication: Uses your OpenAI API credentials stored securely in N8N.

- Body: A JSON payload defining the session parameters:

- instructions: Sets the AI's persona (e.g., "You are a friendly assistant.").

- model: Specifies the real-time capable model (e.g., gpt-4o-realtime-preview-2024-12-17).

- modalities: Enables audio and text.

- voice: Chooses the text-to-speech voice (e.g., alloy).

- input_audio_transcription: Configures Whisper for transcribing user input.

- Output: Critically, OpenAI responds with a client_secret object whose value field holds a short-lived ephemeral key. The browser uses this key, rather than your real API key, to connect directly to this specific AI session via WebRTC.
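To make the HTTP Request node's job concrete, here is a minimal TypeScript sketch of the same call outside N8N. It only builds the request and reads the key from the response. The helper names (`buildSessionRequest`, `extractEphemeralKey`) and the `SessionConfig` shape are hypothetical. The body fields mirror the parameters listed above, but check OpenAI's Realtime API reference for the current schema, since preview APIs can change.

```typescript
// Hypothetical config shape: the values the N8N node puts in its JSON body.
interface SessionConfig {
  instructions: string;
  model: string;
  voice: string;
}

interface SessionRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Shape of the part of the response this sketch reads (assumption: other fields omitted).
interface SessionResponse {
  client_secret: { value: string; expires_at: number };
}

const SESSIONS_URL = "https://api.openai.com/v1/realtime/sessions";

// Builds the POST request that creates a Realtime session.
function buildSessionRequest(apiKey: string, config: SessionConfig): SessionRequest {
  const payload = {
    instructions: config.instructions,
    model: config.model,
    modalities: ["audio", "text"],
    voice: config.voice,
    // Ask the API to transcribe the user's speech with Whisper.
    input_audio_transcription: { model: "whisper-1" },
  };
  return {
    url: SESSIONS_URL,
    method: "POST",
    headers: {
      // The real API key stays on the server (inside N8N in the original workflow).
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(payload),
  };
}

// Pulls out the ephemeral key that the browser will use.
function extractEphemeralKey(response: SessionResponse): string {
  return response.client_secret.value;
}
```

In a server-side script you could pass the result to `fetch(req.url, req)` and call `extractEphemeralKey` on the parsed JSON. Keeping the real API key on the server is the point of the design: only the ephemeral key ever reaches the browser.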

- HTML Node:

- Configuration: Contains the HTML document structure, basic CSS for displaying messages, and the crucial JavaScript code.

- JavaScript Breakdown:

- init() function: This is the core client-side logic.

- Get Ephemeral Key: The key is embedded into the page when N8N renders the HTML node, through the expression {{ $json.client_secret.value }}, which reads the client_secret returned by the previous HTTP Request node.

- WebRTC Setup: Creates an RTCPeerConnection, the browser technology for real-time audio/video streaming.

- Microphone Access: Uses navigator.mediaDevices.getUserMedia to get audio input from the user's microphone and adds it to the connection.

- Audio Output: Sets up an <audio> element to play the incoming audio stream from OpenAI.

- Data Channel: Creates an RTCDataChannel named "oai-events". This is how text-based events (like transcription updates) are sent from OpenAI back to the browser.

- SDP Handshake: Performs the Session Description Protocol (SDP) offer/answer exchange with the OpenAI Realtime API endpoint (/v1/realtime?model=...) using the ephemeral key for authorization. This establishes the direct connection.

- handleResponse(e) function: Listens for messages on the data channel. It parses the incoming JSON events from OpenAI.

- response.audio_transcript.delta: Appends partial AI transcript chunks to the "current message" area.

- response.audio_transcript.done: Moves the completed AI transcript to the main message list.

- conversation.item.input_audio_transcription.delta: Appends partial user transcript chunks.

- conversation.item.input_audio_transcription.completed: Moves the completed user transcript to the main message list.
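The four event types above can be modeled as a small state reducer. The TypeScript sketch below is one possible way to organize `handleResponse`, not the workflow's actual code. The `TranscriptState` shape and the `applyEvent`/`handleMessage` names are hypothetical. The event `type` strings and their `delta`/`transcript` fields follow the OpenAI Realtime event names used in the workflow.

```typescript
// Hypothetical UI state for the live transcript.
interface Message {
  role: "user" | "assistant";
  text: string;
}

interface TranscriptState {
  messages: Message[]; // completed turns, in order of completion
  currentAssistant: string; // partial AI transcript being streamed
  currentUser: string; // partial user transcript being streamed
}

// Only the fields this sketch reads; real events carry more (item_id, event_id, ...).
interface RealtimeEvent {
  type: string;
  delta?: string;
  transcript?: string;
}

function applyEvent(state: TranscriptState, event: RealtimeEvent): TranscriptState {
  switch (event.type) {
    case "response.audio_transcript.delta":
      // Append a partial chunk of the AI's spoken reply.
      return { ...state, currentAssistant: state.currentAssistant + (event.delta ?? "") };
    case "response.audio_transcript.done":
      // Move the finished AI transcript into the message list.
      return {
        ...state,
        messages: [...state.messages, { role: "assistant", text: event.transcript ?? state.currentAssistant }],
        currentAssistant: "",
      };
    case "conversation.item.input_audio_transcription.delta":
      // Append a partial chunk of the user's transcribed speech.
      return { ...state, currentUser: state.currentUser + (event.delta ?? "") };
    case "conversation.item.input_audio_transcription.completed":
      // Move the finished user transcript into the message list.
      return {
        ...state,
        messages: [...state.messages, { role: "user", text: event.transcript ?? state.currentUser }],
        currentUser: "",
      };
    default:
      // Ignore every other event type (session.created, rate_limits.updated, ...).
      return state;
  }
}

// Entry point for raw data-channel messages, which arrive as JSON strings.
function handleMessage(state: TranscriptState, raw: string): TranscriptState {
  return applyEvent(state, JSON.parse(raw) as RealtimeEvent);
}
```

Inside the page, `handleResponse(e)` could call `state = handleMessage(state, e.data)` and then re-render the message list and the two partial strings. Keeping the parsing pure makes it testable without a microphone. One caveat: OpenAI documents that input transcription runs asynchronously, so a `...completed` event can arrive after the assistant's reply has started. Ordering by completion, as here, may then show the user's line after the start of the reply.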

- Respond to Webhook Node:

- Purpose: Takes the HTML generated by the previous node and sends it back to the user's browser as the response to the initial webhook request.

- Configuration: Set to respond with the text/HTML content from the HTML node (={{ $json.html }}).
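The hand-off between the HTML node and the Respond to Webhook node boils down to "fill a template with the ephemeral key and return it as a web page." The sketch below is a slightly more defensive take on what N8N does when it substitutes `{{ $json.client_secret.value }}` into the page. `renderPage`, `toScriptLiteral`, and the element ids are hypothetical. It encodes values with `JSON.stringify` and escapes `<`, so each value is always a valid JavaScript string literal and cannot close the `<script>` tag early.

```typescript
// Turns any string into a safe JavaScript string literal for inline <script> use.
function toScriptLiteral(value: string): string {
  // JSON.stringify handles quotes and backslashes; escaping "<" keeps a value
  // containing "</script>" from terminating the script element.
  return JSON.stringify(value).replace(/</g, "\\u003c");
}

// Hypothetical page template: the real workflow's HTML, CSS, and init() are longer.
function renderPage(ephemeralKey: string, model: string): string {
  return `<!DOCTYPE html>
<html>
<head><meta charset="utf-8"><title>Realtime AI</title></head>
<body>
  <div id="messages"></div>
  <div id="current"></div>
  <audio id="remote-audio" autoplay></audio>
  <script>
    const EPHEMERAL_KEY = ${toScriptLiteral(ephemeralKey)};
    const MODEL = ${toScriptLiteral(model)};
    // init() from the workflow would run here, using EPHEMERAL_KEY and MODEL.
  </script>
</body>
</html>`;
}
```

Because the ephemeral key is short-lived, each page load should start a fresh session. Running the whole workflow once per webhook request gives you exactly that.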

The HTML Node's Purpose:

The HTML node generates the page displayed in the user's browser. It bundles the structure (HTML), the styling (CSS), and the client-side logic (JavaScript) described above.

How it Feels:

When you open the webhook URL:

- N8N runs the workflow.

- It asks OpenAI to create a real-time session.

- It generates an HTML page containing the necessary JavaScript and the unique client_secret.

- This page is sent to your browser.

- The JavaScript in your browser asks for microphone permission.

- It uses the client_secret to establish a direct WebRTC connection with the OpenAI session.

- As you speak, your audio is streamed to OpenAI.

- OpenAI streams back audio responses AND sends text events (deltas and completed transcripts) over the data channel.

- The JavaScript updates the HTML page dynamically, showing the conversation transcript unfold in real-time!
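The step where the browser "uses the client_secret to establish a direct WebRTC connection" is a single HTTP exchange. The page POSTs its SDP offer to the Realtime endpoint with the ephemeral key as a Bearer token and receives the SDP answer as plain text. Below is a minimal TypeScript sketch of that exchange. It assumes the `https://api.openai.com/v1/realtime?model=...` endpoint and the `application/sdp` content type from OpenAI's WebRTC guide. `buildSdpOfferRequest`, `exchangeSdp`, and the `FetchLike` type are hypothetical names, introduced so the logic can be exercised without a browser.

```typescript
const REALTIME_BASE_URL = "https://api.openai.com/v1/realtime";

interface SdpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Minimal fetch-like signature; the browser's own fetch satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; text(): Promise<string> }>;

// Builds the offer POST: the SDP text is the raw request body.
function buildSdpOfferRequest(offerSdp: string, ephemeralKey: string, model: string): SdpRequest {
  return {
    url: `${REALTIME_BASE_URL}?model=${encodeURIComponent(model)}`,
    method: "POST",
    headers: {
      // The ephemeral key, not the real API key, authorizes the browser.
      Authorization: `Bearer ${ephemeralKey}`,
      "Content-Type": "application/sdp",
    },
    body: offerSdp,
  };
}

// Sends the offer and returns the SDP answer text.
async function exchangeSdp(
  offerSdp: string,
  ephemeralKey: string,
  model: string,
  fetchFn: FetchLike,
): Promise<string> {
  const req = buildSdpOfferRequest(offerSdp, ephemeralKey, model);
  const res = await fetchFn(req.url, { method: req.method, headers: req.headers, body: req.body });
  if (!res.ok) {
    throw new Error(`SDP exchange failed: HTTP ${res.status}`);
  }
  // The response body is the SDP answer, as plain text.
  return res.text();
}
```

In the page itself, you would first add the microphone track and the "oai-events" data channel, then run `await pc.setLocalDescription(await pc.createOffer())`. Next, call `exchangeSdp` with the browser's `fetch` and apply the result with `pc.setRemoteDescription({ type: "answer", sdp: answerSdp })`. From then on, audio and data-channel events flow directly between the browser and OpenAI, and N8N is no longer involved.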

Why is this cool?

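- Low Latency: Once the session is set up, audio flows directly between the browser and OpenAI over WebRTC, so replies arrive quickly enough to feel natural and immediate.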

- Live Transcription: Visual feedback enhances understanding and accessibility.

- Low-Code Power: N8N handles the backend orchestration, API calls, and credential management with minimal code.

- Browser-Based: No complex client software needed, just a modern web browser.

- Cutting-Edge AI: Leverages OpenAI's powerful real-time models.

This workflow demonstrates the potential of combining modern AI services with accessible automation tools like N8N. It opens the door to building sophisticated, interactive voice applications, from custom assistants and accessibility tools to novel customer service interfaces, faster and more easily than ever before.

Feel free to adapt this workflow and explore the possibilities! Happy building!

You can download the full workflow here:

Thanks for reading ❤️

Thank you so much for reading, and do check out the other N8N tutorials to learn more about N8N. You can also create your service on Elestio. See you in the next one 👋