Caching with Redis for Backend in Apache Superset

Self-hosting Apache Superset and Redis on Elestio provides a foundation for creating interactive dashboards with optimized performance. One way to improve performance is by configuring Redis as the caching backend for Superset. This guide walks through the process of integrating Redis with Superset, highlights common pitfalls, and explains how to verify the setup to ensure everything is working as expected.

Why Use Redis as a Caching Backend?

Redis is a high-performance, in-memory data structure store, often used as a database, cache, and message broker. In Superset, Redis can significantly speed up dashboards by caching query results, which also reduces the load on your database. When Celery is configured for Superset, Redis can also serve as Celery's message broker and result backend, handling task scheduling and background job messaging.
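To make the caching mechanism concrete, here is a minimal sketch of the cache-aside pattern a Redis cache enables: look up a key, fall back to the database on a miss, and store the result with a time-to-live. It is an illustration only, not Superset's own code. An in-memory dictionary stands in for Redis, and `TTLCache` and `cached_query` are hypothetical names. The 300-second timeout and the `superset_` prefix mirror the `CACHE_DEFAULT_TIMEOUT` and `CACHE_KEY_PREFIX` values configured later in this guide.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Hypothetical in-memory stand-in for a Redis cache with expiring keys."""

    def __init__(self, default_timeout: float = 300, key_prefix: str = "superset_",
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.default_timeout = default_timeout
        self.key_prefix = key_prefix
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        full_key = self.key_prefix + key
        entry = self._store.get(full_key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            # An expired entry behaves like a miss, as a Redis key with a TTL would.
            del self._store[full_key]
            return None
        return value

    def set(self, key: str, value: Any, timeout: Optional[float] = None) -> None:
        ttl = self.default_timeout if timeout is None else timeout
        self._store[self.key_prefix + key] = (self.clock() + ttl, value)


def cached_query(cache: TTLCache, sql: str, run_query: Callable[[str], Any]) -> Any:
    """Cache-aside: serve from the cache when possible, otherwise query the database."""
    result = cache.get(sql)
    if result is None:  # note: a cached None is indistinguishable from a miss here
        result = run_query(sql)  # the expensive database round trip
        cache.set(sql, result)
    return result
```

Calling `cached_query` twice with the same SQL inside the timeout runs the database query only once; after the timeout, the next call goes back to the database and refreshes the entry.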

Hosting Superset and Redis on Elestio

Both Superset and Redis need to be set up on Elestio. Ensure that Redis is properly installed and running. You should have access to the Redis host, port, password, and optionally, database numbers for caching and Celery. Similarly, Superset should be up and running with its dependencies configured.

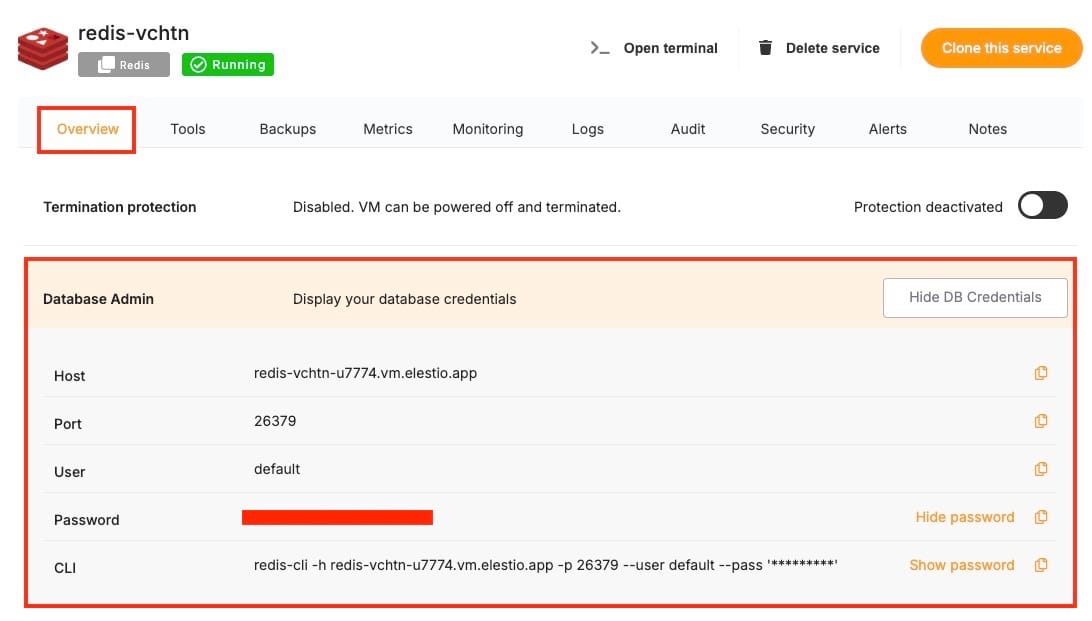

For Redis on Elestio:

- Log in to your Elestio dashboard and create a new Redis service.

- Note down the connection details (host, port, and password).

Configuring Superset for Redis Caching

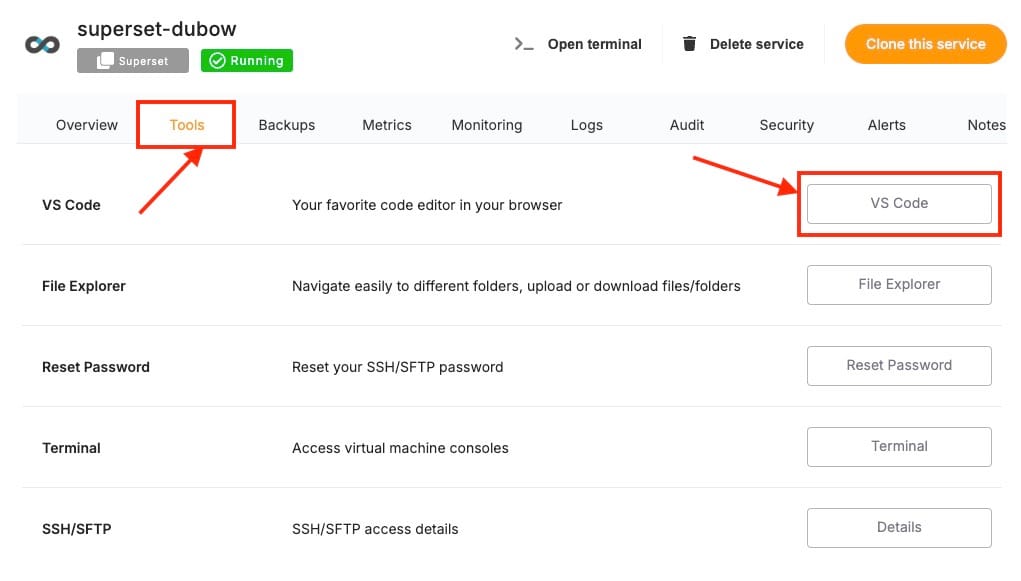

On your Elestio dashboard, open the Tools section and launch VS Code. All of the following changes are made in that VS Code instance.

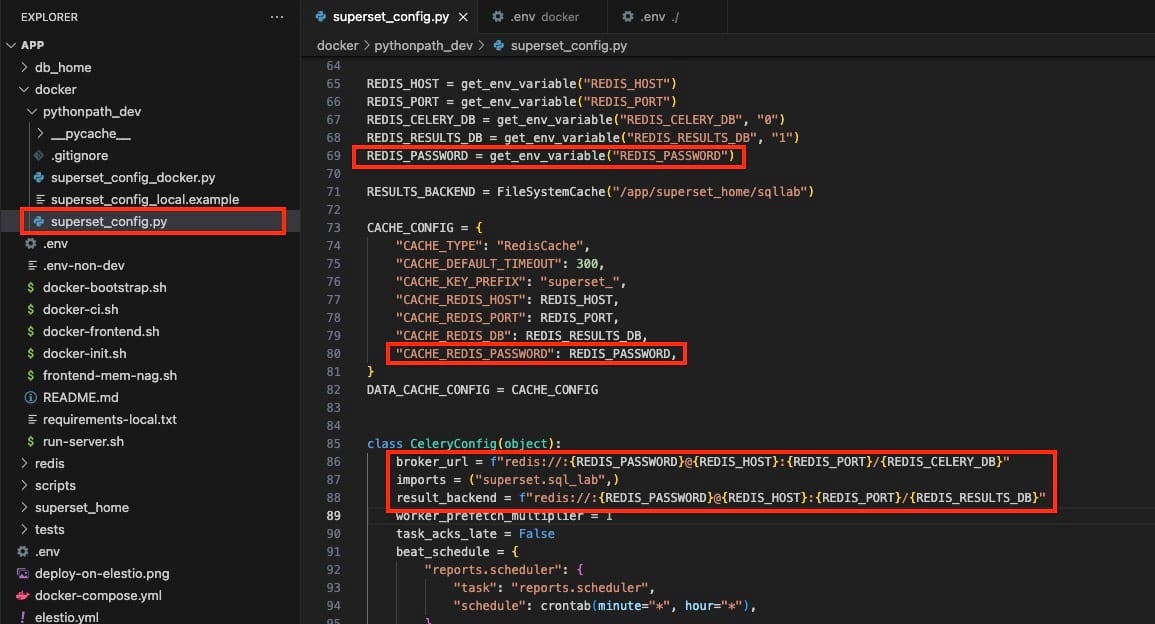

Locate your Superset configuration file (commonly superset_config.py) under docker/pythonpath_dev and add the Redis details for both caching and Celery task scheduling. First, add the following line next to the existing Redis settings, as shown in the image:

```python
REDIS_PASSWORD = get_env_variable("REDIS_PASSWORD")
```

Configure Celery:

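Celery handles task scheduling and background execution, such as asynchronous SQL Lab queries. Update `broker_url` and `result_backend` in the `CeleryConfig` class of superset_config.py so that both include the password: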

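```python
class CeleryConfig:
    # Message broker, stored in the Redis database reserved for Celery
    broker_url = f"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}/{REDIS_CELERY_DB}"
    imports = ("superset.sql_lab",)
    # Where Celery stores task results
    result_backend = f"redis://:{REDIS_PASSWORD}@{REDIS_HOST}:{REDIS_PORT}/{REDIS_RESULTS_DB}"
    # Keep any other settings already defined in this class unchanged
```

Configure Caching: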

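Point Superset's cache at Redis by adding the following configuration to superset_config.py. `CACHE_DEFAULT_TIMEOUT` sets how long cached entries live, in seconds, and `CACHE_KEY_PREFIX` is prepended to every key Superset writes. `CACHE_CONFIG` covers Superset's metadata cache; chart query results go through `DATA_CACHE_CONFIG`, which the stock Docker configuration sets equal to `CACHE_CONFIG`, so make sure your file keeps that assignment: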

```python
CACHE_CONFIG = {
    "CACHE_TYPE": "RedisCache",
    "CACHE_DEFAULT_TIMEOUT": 300,
    "CACHE_KEY_PREFIX": "superset_",
    "CACHE_REDIS_HOST": REDIS_HOST,
    "CACHE_REDIS_PORT": REDIS_PORT,
    "CACHE_REDIS_DB": REDIS_RESULTS_DB,
    "CACHE_REDIS_PASSWORD": REDIS_PASSWORD,
}
```

Define Redis Environment Variables:

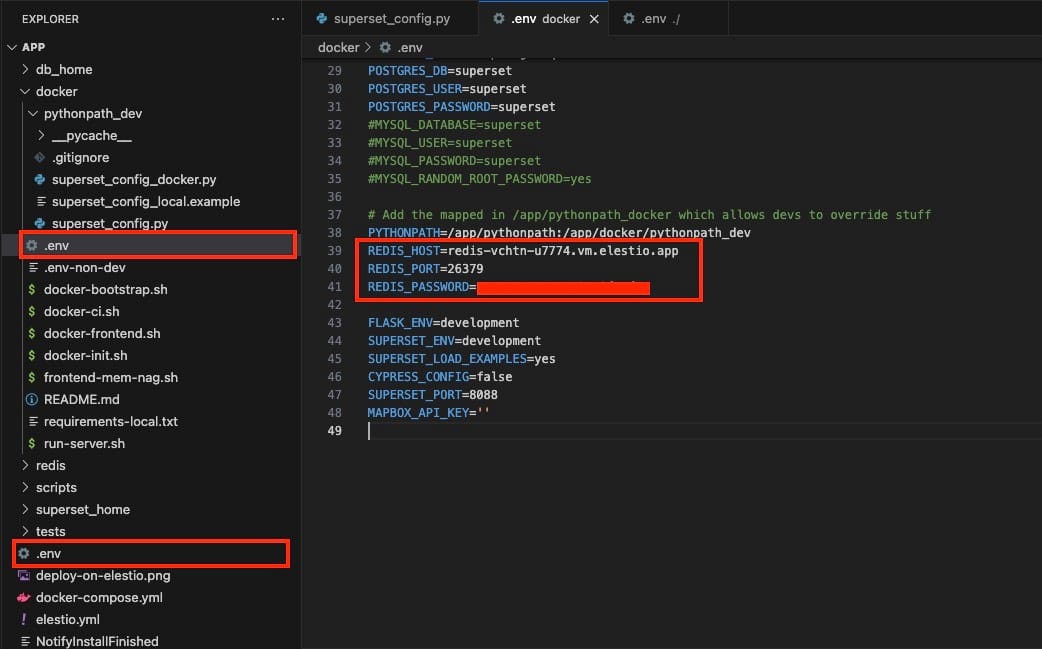

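If you're using Docker, add the Redis environment variables that the configuration reads (`REDIS_HOST`, `REDIS_PORT`, `REDIS_PASSWORD`, and optionally `REDIS_CELERY_DB` and `REDIS_RESULTS_DB`) to your .env files, which are referenced by your docker-compose file. Be sure to make these changes in both env files.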
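The configuration in this guide reads these values through `get_env_variable`, a helper defined in Superset's Docker superset_config.py. The sketch below mirrors that helper so you can check, before restarting, that every Redis variable is set. The defaults of `"0"` for the Celery database and `"1"` for the results database are an assumption based on the stock Docker configuration, and `load_redis_settings` is a hypothetical name; adjust both to match your file.

```python
import os
from typing import Dict, Optional


def get_env_variable(var_name: str, default: Optional[str] = None) -> str:
    """Return an environment variable, falling back to a default or raising OSError."""
    try:
        return os.environ[var_name]
    except KeyError:
        if default is not None:
            return default
        raise OSError(f"The environment variable {var_name} was missing, abort...")


def load_redis_settings() -> Dict[str, str]:
    """Hypothetical helper: collect the Redis settings used by the cache and Celery."""
    return {
        "REDIS_HOST": get_env_variable("REDIS_HOST"),
        "REDIS_PORT": get_env_variable("REDIS_PORT"),
        "REDIS_PASSWORD": get_env_variable("REDIS_PASSWORD"),
        # Assumed defaults, matching the stock Docker configuration
        "REDIS_CELERY_DB": get_env_variable("REDIS_CELERY_DB", "0"),
        "REDIS_RESULTS_DB": get_env_variable("REDIS_RESULTS_DB", "1"),
    }


if __name__ == "__main__":
    try:
        settings = load_redis_settings()
    except OSError as exc:
        print(f"Missing configuration: {exc}")
    else:
        # Mask the password so it never ends up in a terminal or log
        print({k: ("***" if k == "REDIS_PASSWORD" else v) for k, v in settings.items()})
```

Running it inside the Superset container, where the env files are loaded, shows whether any variable is missing before you restart the services.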

After updating the configuration, restart your Superset services:

```bash
docker-compose down && docker-compose up -d
```

Verifying Redis Connectivity

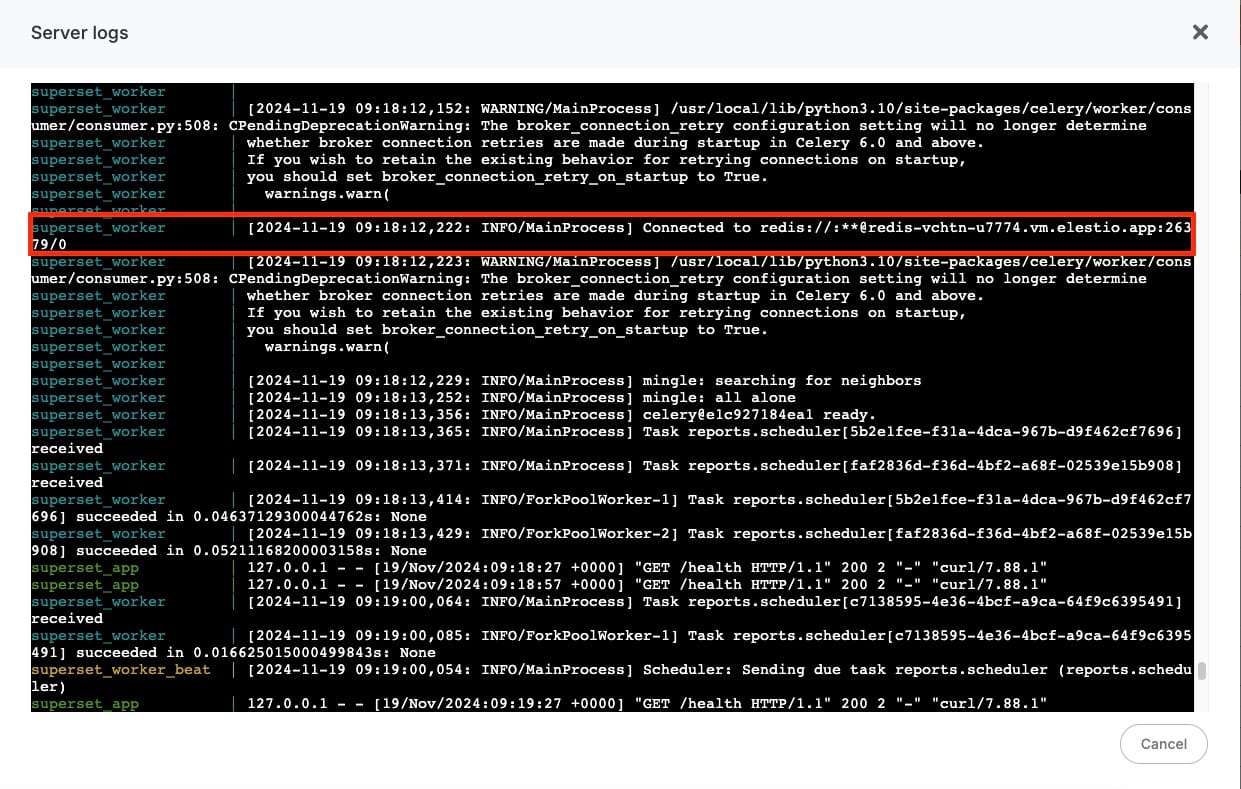

Check Superset Logs:

On the Superset service dashboard in Elestio, open View app logs under the Software section. The worker logs there show whether the connection to Redis succeeded.
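If the logs are long, a short script can pick out the relevant lines. The sketch below scans log text for the two authentication errors listed under Debugging Common Issues and for a success marker. It assumes the Celery worker writes a line containing `Connected to redis://` when it reaches the broker, which is Celery's usual startup message; treat that string and the `classify_log` name as assumptions to adapt to what your logs actually show.

```python
from typing import Dict, Iterable, List

# Error strings taken from the Debugging Common Issues section of this guide
AUTH_ERRORS = ("invalid username-password pair", "authentication required")
# Assumed success marker: Celery workers usually log "Connected to redis://..." at startup
SUCCESS_MARKER = "connected to redis://"


def classify_log(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Hypothetical helper: sort log lines into Redis connections and auth errors."""
    report: Dict[str, List[str]] = {"connected": [], "auth_errors": []}
    for line in lines:
        lowered = line.lower()  # match regardless of capitalization
        if SUCCESS_MARKER in lowered:
            report["connected"].append(line.rstrip())
        elif any(err in lowered for err in AUTH_ERRORS):
            report["auth_errors"].append(line.rstrip())
    return report
```

To use it, save a copy of the logs and pass the open file to the function, for example `classify_log(open("worker.log"))`; an empty `auth_errors` list together with at least one `connected` line suggests the worker authenticated successfully.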

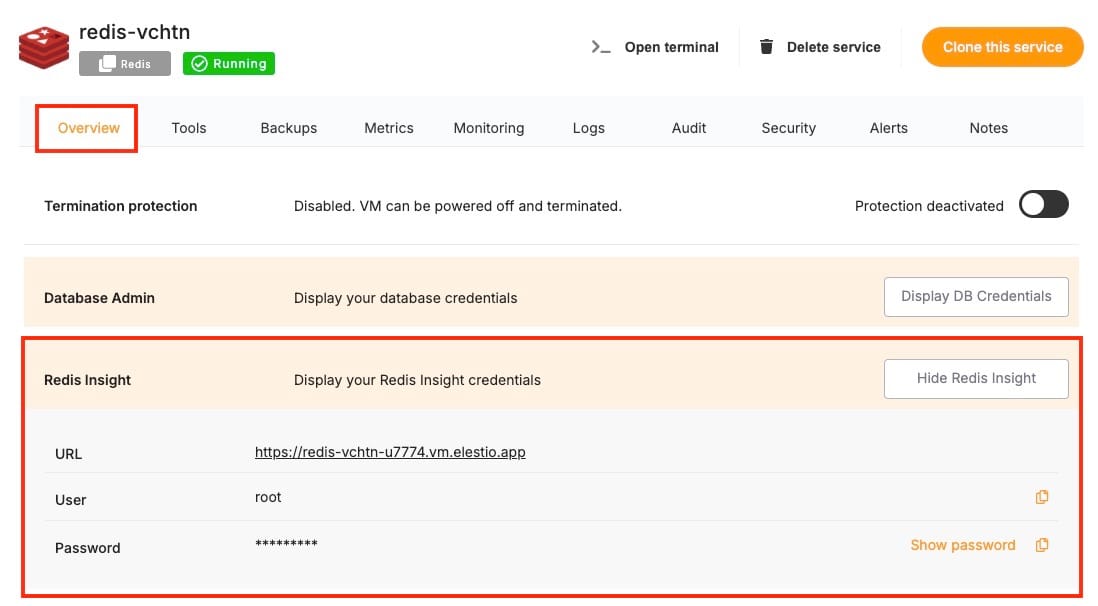

Redis Insights:

From the Redis service dashboard, you can open Redis Insights. Use the credentials provided to log in.

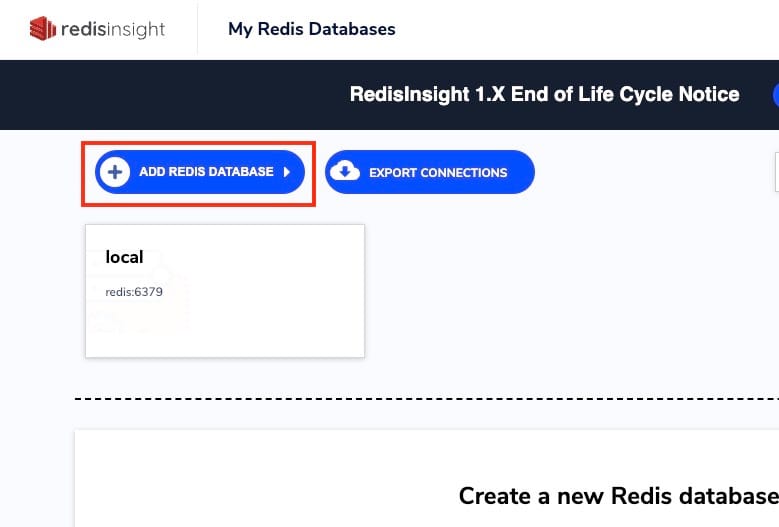

Once you are logged in, click Add Redis Database and enter the connection details from the service dashboard. The statistics and usage figures shown for that database confirm whether the connection is working.
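If you prefer to check from code instead of the Redis Insights UI, the sketch below works with a redis-py client (`redis.Redis`). It counts keys carrying the `superset_` prefix set in `CACHE_KEY_PREFIX` and computes the hit ratio from `INFO stats`. The function names are hypothetical, the connection values in the usage comment are placeholders for the details on your service dashboard, and the `db` number must match the one used for `CACHE_REDIS_DB`.

```python
from typing import Any, Dict

# The client is expected to be a redis.Redis instance (redis-py); any object with the
# same scan_iter() and info() methods works, which keeps these functions easy to test.


def count_cache_keys(client: Any, prefix: str = "superset_") -> int:
    """Count keys carrying Superset's CACHE_KEY_PREFIX in the client's database."""
    # SCAN iterates incrementally instead of blocking the server the way KEYS would
    return sum(1 for _ in client.scan_iter(match=f"{prefix}*"))


def hit_ratio(client: Any) -> float:
    """Share of key lookups that found a key, server-wide, since Redis last started."""
    stats: Dict[str, Any] = client.info("stats")
    hits = int(stats.get("keyspace_hits", 0))
    misses = int(stats.get("keyspace_misses", 0))
    total = hits + misses
    return hits / total if total else 0.0


# Usage sketch (placeholder connection values; db must match CACHE_REDIS_DB):
# import redis
# client = redis.Redis(host="your-redis-host", port=6379, password="your-password", db=1)
# print(count_cache_keys(client), round(hit_ratio(client), 2))
```

A growing key count after you open a few dashboards indicates Superset is writing to the cache. Because the hit ratio is server-wide, it also includes lookups made by Celery.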

Debugging Common Issues

If you encounter issues such as "invalid username-password pair" or "authentication required," double-check the following:

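- Ensure that `broker_url` and `result_backend` in `CeleryConfig` are correctly formatted: `redis://:<password>@<host>:<port>/<db>`.
- Verify that the Redis password is correct and that Redis is configured to allow password-protected access.
- Match the error to its cause: "invalid username-password pair" means a password was sent but rejected, while "authentication required" means a client connected without sending one, for example because the password is missing from one of the URLs or `CACHE_REDIS_PASSWORD` was left out of `CACHE_CONFIG`.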
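A frequent cause of these errors is a password containing characters such as `@`, `:` or `/`, which break the URL unless they are percent-encoded. The sketch below builds a `redis://:<password>@<host>:<port>/<db>` URL with the password encoded and parses it back with Python's standard library so each part can be checked. `build_redis_url` and `check_redis_url` are hypothetical helpers. Celery (through Kombu) and redis-py both unquote the password when parsing such URLs, but verify this with the versions you run before relying on it.

```python
from typing import Dict, Union
from urllib.parse import quote, unquote, urlsplit


def build_redis_url(password: str, host: str, port: Union[int, str], db: Union[int, str]) -> str:
    """Build redis://:<password>@<host>:<port>/<db> with the password percent-encoded."""
    # safe="" makes quote() also encode "/", which it leaves alone by default
    return f"redis://:{quote(password, safe='')}@{host}:{port}/{db}"


def check_redis_url(url: str) -> Dict[str, object]:
    """Split a Redis URL into its parts so each one can be checked by eye."""
    parts = urlsplit(url)
    if parts.scheme not in ("redis", "rediss"):  # rediss:// is the TLS variant
        raise ValueError(f"unexpected scheme: {parts.scheme!r}")
    return {
        "password": unquote(parts.password or ""),
        "host": parts.hostname,
        "port": parts.port,
        "db": int(parts.path.lstrip("/") or 0),
    }


# Hypothetical usage inside CeleryConfig:
# broker_url = build_redis_url(REDIS_PASSWORD, REDIS_HOST, REDIS_PORT, REDIS_CELERY_DB)
```

If `check_redis_url` returns a password, host, port, or database that differs from what you expect, the URL is malformed, which is a likely source of the authentication errors above.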

Thanks for reading ❤️

Integrating Redis as a caching backend in Apache Superset enhances the platform's responsiveness, especially for dashboards with heavy queries or frequent updates. By following this guide, you can ensure a seamless integration and enjoy the benefits of optimized performance in your analytics workflows.