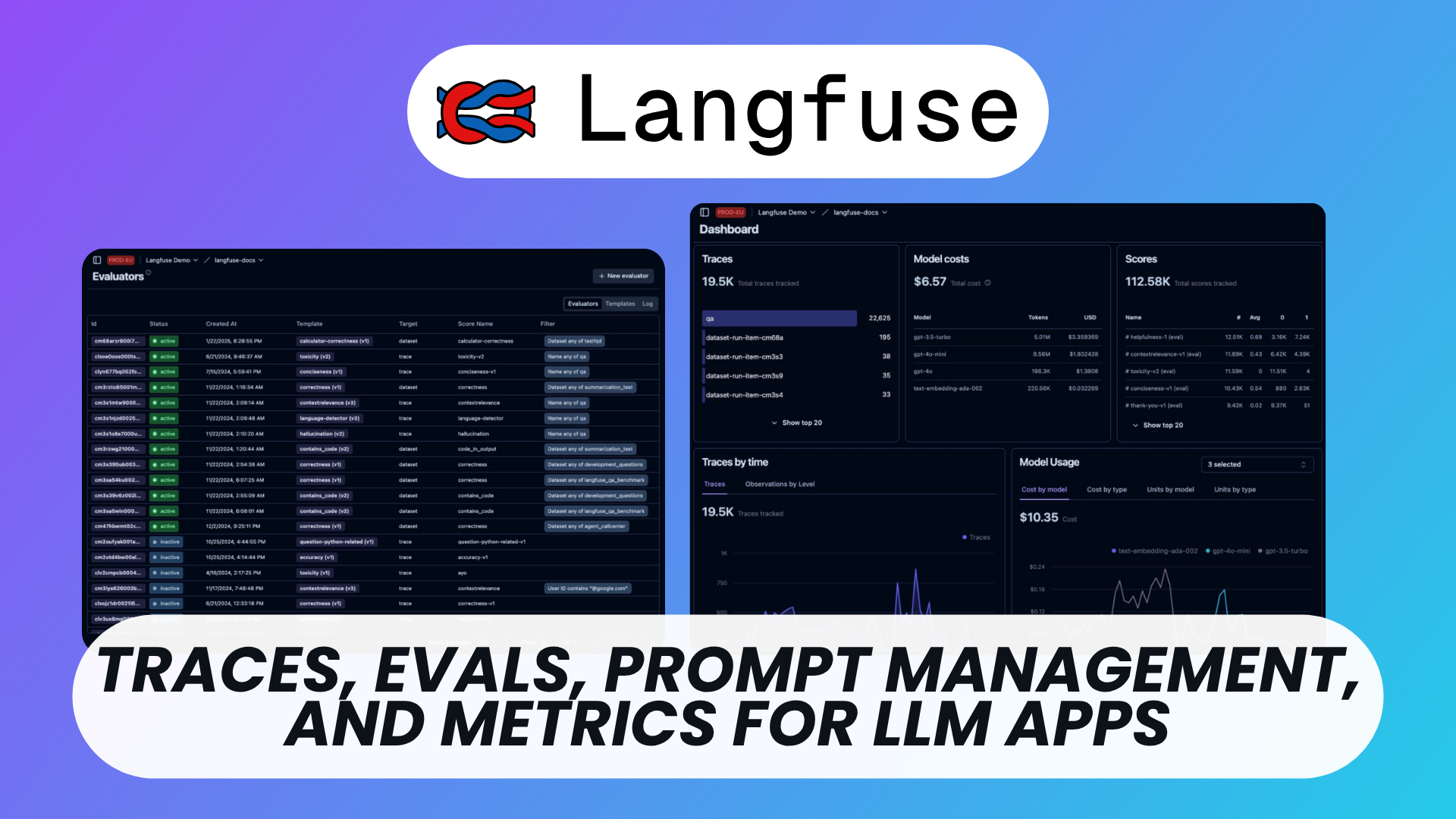

Langfuse: A Free, Open-Source LLM Engineering Platform

As teams race to integrate large language models (LLMs) into their applications, a major challenge arises: how do you observe, debug, and improve these models in production? That’s where Langfuse steps in.

Langfuse is a free, open-source LLM engineering platform that brings end-to-end observability, prompt management, testing, and debugging together in a single place.

Whether you’re building with OpenAI, Anthropic, or open models like LLaMA or Mistral, Langfuse gives you a structured, transparent, and collaborative interface for shipping reliable AI features faster.


Organization & Permissions

Langfuse supports team collaboration out of the box. You can structure your work into organizations and projects, assign roles, and control access through granular permission settings.

This is ideal for startups and larger AI teams alike, allowing them to manage multiple products and environments under one roof while keeping sensitive prompt iterations or datasets secure.
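To make "granular permission settings" concrete, here is a minimal sketch of role-based access control in plain Python. The role names, the permission strings, and the `is_allowed` helper are all hypothetical and chosen for illustration; they are not Langfuse's API, and Langfuse's actual roles and scopes may differ.

```python
from enum import Enum

# Hypothetical roles, ordered from least to most privileged (an assumption for illustration).
class Role(Enum):
    VIEWER = 1
    MEMBER = 2
    ADMIN = 3
    OWNER = 4

# Hypothetical mapping from an action to the minimum role allowed to perform it.
MIN_ROLE = {
    "traces:read": Role.VIEWER,
    "prompts:update": Role.MEMBER,
    "datasets:delete": Role.ADMIN,
    "members:manage": Role.ADMIN,
    "project:delete": Role.OWNER,
}

def is_allowed(memberships: dict, project: str, action: str) -> bool:
    """Return True if the user's role in `project` meets the minimum role for `action`."""
    role = memberships.get(project)
    if role is None:  # no membership in this project means no access at all
        return False
    return role.value >= MIN_ROLE[action].value

# A user who can edit prompts in one project but only read traces in another.
alice = {"chatbot-prod": Role.MEMBER, "search-staging": Role.VIEWER}
print(is_allowed(alice, "chatbot-prod", "prompts:update"))    # True
print(is_allowed(alice, "search-staging", "prompts:update"))  # False
print(is_allowed(alice, "billing", "traces:read"))            # False
```

Scoping roles per project, rather than per user globally, is what lets one person iterate freely on a staging project while having read-only access to production data.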

Project Integration and SDKs

Langfuse offers official SDKs for Python and TypeScript/JavaScript, plus a public API and community-maintained clients for other languages such as Go, making it simple to integrate into existing LLM pipelines.

It’s also compatible with common tools in the LLM ecosystem such as LangChain, the OpenAI SDK, and LlamaIndex, enabling drop-in instrumentation with just a few lines of code.

Whether you’re logging individual generations, agents, or multi-step chains, Langfuse makes it easy to trace and monitor your application's LLM behavior.
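Langfuse's Python SDK provides an `@observe()` decorator for this kind of instrumentation. The sketch below is not that implementation; it is a hypothetical, self-contained toy that shows the general mechanism: a decorator records each call as a span and uses a context variable to remember the active span, so nested calls are linked to their parent. The `observe` name here and the `SPANS` list are illustrative only.

```python
import functools
import time
import uuid
from contextvars import ContextVar

# Collected spans; a real SDK would batch these and send them to a backend.
SPANS = []
# Tracks the currently active span id so nested calls can find their parent.
_current_span = ContextVar("current_span", default=None)

def observe(fn):
    """Hypothetical tracing decorator: records name, parent, input, output, and duration."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        span = {"id": uuid.uuid4().hex, "name": fn.__name__,
                "parent": _current_span.get(), "input": args}
        token = _current_span.set(span["id"])  # children will see this span as their parent
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        finally:
            span["duration_s"] = time.perf_counter() - start
            _current_span.reset(token)  # restore the enclosing span (or None)
            SPANS.append(span)
    return wrapper

@observe
def retrieve(query):
    return ["doc-1", "doc-2"]  # stand-in for a vector search

@observe
def generate(query, docs):
    return f"Answer to {query!r} using {len(docs)} docs"  # stand-in for an LLM call

@observe
def rag_pipeline(query):
    return generate(query, retrieve(query))

rag_pipeline("What is Langfuse?")
by_id = {s["id"]: s for s in SPANS}
for s in SPANS:
    parent = by_id[s["parent"]]["name"] if s["parent"] else None
    print(s["name"], "<-", parent)
# retrieve <- rag_pipeline
# generate <- rag_pipeline
# rag_pipeline <- None
```

Spans are appended when they finish, so children appear before their parent; a backend reassembles the tree from the parent ids.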

Observability for the OpenAI SDK

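If you're using the OpenAI SDK, Langfuse's drop-in integration (in Python, replacing `import openai` with `from langfuse.openai import openai`) automatically captures detailed metadata such as: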

- Model name and parameters

- Token usage

- Latency

- Cost estimates

- API responses and errors

This level of detail is essential for tracking costs and for debugging prompt failures or performance regressions, giving you an in-depth view of how your application interacts with LLMs in production.
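Cost estimates of this kind come down to simple arithmetic: token usage multiplied by a per-token price for the model, summed over input and output tokens. The sketch below shows that calculation; the model name `example-model` and its prices are made-up placeholders, not real OpenAI pricing, and the helper names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1M tokens; NOT real pricing, substitute your provider's current rates.
PRICES_PER_1M = {
    "example-model": {"input": 2.50, "output": 10.00},
}

@dataclass
class Usage:
    input_tokens: int
    output_tokens: int

def estimate_cost(model: str, usage: Usage) -> float:
    """Cost = (input_tokens * input_price + output_tokens * output_price) / 1,000,000."""
    price = PRICES_PER_1M[model]
    return (usage.input_tokens * price["input"]
            + usage.output_tokens * price["output"]) / 1_000_000

cost = estimate_cost("example-model", Usage(input_tokens=1200, output_tokens=300))
print(f"${cost:.4f}")  # $0.0060
```

Because output tokens are often priced higher than input tokens, a prompt change that makes responses longer can raise costs even when the prompt itself gets shorter.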

Dashboard & Metrics

The Langfuse dashboard is your mission control center. You get:

- Global metrics on token consumption, latency, and success/failure rates

- Interactive filters to drill down by project, environment, or user session

- Custom dashboards to track business-specific KPIs tied to LLM responses

This centralized view helps teams identify trends, anomalies, and usage patterns without diving into raw logs.
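As a rough illustration of what such a dashboard computes, the sketch below aggregates a handful of hypothetical observation records into per-environment token totals, success rates, and latency percentiles. The record fields (`env`, `tokens`, `latency_ms`, `ok`) and the sample values are invented for the example and do not reflect Langfuse's storage schema.

```python
import math
from collections import defaultdict

# Hypothetical observation records, shaped loosely like what a tracing backend might store.
observations = [
    {"env": "production", "tokens": 850,  "latency_ms": 920,  "ok": True},
    {"env": "production", "tokens": 1200, "latency_ms": 1430, "ok": True},
    {"env": "production", "tokens": 400,  "latency_ms": 3100, "ok": False},
    {"env": "staging",    "tokens": 600,  "latency_ms": 780,  "ok": True},
]

def percentile(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of values at or below it."""
    ranked = sorted(values)
    k = max(1, math.ceil(p / 100 * len(ranked)))
    return ranked[k - 1]

def summarize(rows):
    """Group records by environment and compute dashboard-style metrics for each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["env"]].append(row)
    summary = {}
    for env, items in groups.items():
        latencies = [r["latency_ms"] for r in items]
        summary[env] = {
            "requests": len(items),
            "total_tokens": sum(r["tokens"] for r in items),
            "success_rate": sum(r["ok"] for r in items) / len(items),
            "p50_latency_ms": percentile(latencies, 50),
            "p95_latency_ms": percentile(latencies, 95),
        }
    return summary

for env, stats in summarize(observations).items():
    print(env, stats)
```

Percentiles are used instead of averages because a few slow LLM calls can dominate the mean while leaving the typical request unaffected.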

Tracing & Sessions

Langfuse provides fine-grained tracing for every LLM request or chain. You can:

- Inspect inputs, outputs, metadata, and intermediate steps

- Group related traces into sessions (e.g., a user's chat interaction)

- Analyze nested generations, tool calls, or agent behavior visually

This makes Langfuse especially useful for debugging complex workflows such as chatbots, search pipelines, or multi-agent systems.
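The relationship between sessions, traces, and nested observations can be sketched as a small data model. This is a hypothetical toy, not Langfuse's API: each trace holds a tree of observations (here labeled with kinds like "span" and "generation") and an optional session id, and traces sharing a session id are grouped together, much like the turns of one chat conversation.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Observation:
    name: str
    kind: str  # e.g. "span" or "generation" in this toy model
    children: List["Observation"] = field(default_factory=list)

@dataclass
class Trace:
    trace_id: str
    session_id: Optional[str]
    root: Observation

def group_by_session(traces):
    """Group traces by session id, preserving order; traces without a session land under None."""
    sessions = defaultdict(list)
    for t in traces:
        sessions[t.session_id].append(t)
    return dict(sessions)

def render(obs, depth=0):
    """Return an indented outline of an observation tree, one line per node."""
    lines = ["  " * depth + f"{obs.kind}: {obs.name}"]
    for child in obs.children:
        lines.extend(render(child, depth + 1))
    return lines

# Two turns of the same chat session, plus an unrelated batch job.
turn1 = Trace("t1", "chat-42", Observation("chat-turn", "span", [
    Observation("search_docs", "span"),
    Observation("llm-answer", "generation"),
]))
turn2 = Trace("t2", "chat-42", Observation("chat-turn", "span", [
    Observation("llm-answer", "generation"),
]))
batch = Trace("t3", None, Observation("batch-summarize", "span"))

sessions = group_by_session([turn1, turn2, batch])
for t in sessions["chat-42"]:
    print(t.trace_id)
    print("\n".join(render(t.root)))
# t1
# span: chat-turn
#   span: search_docs
#   generation: llm-answer
# t2
# span: chat-turn
#   generation: llm-answer
```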

Annotations & Comments

To support team-based prompt development, Langfuse enables in-line annotations and comments on traces.

You can mark faulty responses, highlight edge cases, or leave notes for other team members. This turns Langfuse into a collaborative workspace for prompt engineering, QA, and product feedback loops.

Prompt Creation with Version Control

Langfuse includes a built-in prompt editor with version history, change diffs, and rollback support.

You can:

- Test prompts live against models

- Compare versions side by side

- Tag prompts for specific use cases or A/B tests

This eliminates the chaos of managing prompts in spreadsheets or Notion docs and gives teams a structured way to evolve prompts safely.
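A useful way to think about prompt versioning is immutable versions plus movable labels: every edit creates a new version, and a label such as `production` points at whichever version is live, so a rollback just moves the label. The in-memory `PromptRegistry` and `compile_prompt` below are hypothetical helpers written for this illustration, not Langfuse's SDK; the `{{variable}}` placeholder syntax is an assumption chosen to resemble common prompt templates.

```python
import re
from dataclasses import dataclass, field

@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store: immutable versions plus movable labels."""
    versions: dict = field(default_factory=dict)  # name -> list of templates (version = index + 1)
    labels: dict = field(default_factory=dict)    # (name, label) -> version number

    def create(self, name, template, labels=()):
        self.versions.setdefault(name, []).append(template)
        version = len(self.versions[name])
        for label in labels:
            self.labels[(name, label)] = version
        return version

    def set_label(self, name, label, version):
        # Rolling back only re-points the label; no version is ever deleted or overwritten.
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name} has no version {version}")
        self.labels[(name, label)] = version

    def get(self, name, label="production"):
        return self.versions[name][self.labels[(name, label)] - 1]

def compile_prompt(template, **variables):
    """Fill {{variable}} placeholders; raises KeyError if a variable is missing."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(variables[m.group(1)]), template)

reg = PromptRegistry()
reg.create("support-reply", "Answer politely: {{question}}", labels=["production"])
reg.create("support-reply", "Answer in one short paragraph: {{question}}", labels=["production"])
reg.set_label("support-reply", "production", 1)  # roll back after spotting a regression
print(compile_prompt(reg.get("support-reply"), question="How do I reset my password?"))
# Answer politely: How do I reset my password?
```

Keeping versions immutable means any past trace can be tied back to the exact prompt text that produced it, which is what makes side-by-side comparison and safe rollback possible.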

Testing with Datasets

Once you have prompts, you can test them at scale with Langfuse Datasets.

Each dataset lets you:

- Evaluate prompts against consistent inputs

- Compare model outputs across prompt versions

- Track pass/fail results, scores, and the output of custom evaluators

This is essential for regression testing, fine-tuning evaluations, and pre-launch validations, especially when deploying LLMs in critical applications like healthcare, finance, or education.
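The core loop of a dataset-based regression test is short: run every dataset item through the application, score each output against the item's expected output, and compare the aggregate across prompt versions. The sketch below is a hypothetical harness, not Langfuse's dataset API; `generate_v1` and `generate_v2` are deterministic stand-ins for LLM calls made with two different prompt versions, and `exact_match` is one simple choice of evaluator.

```python
from dataclasses import dataclass

@dataclass
class DatasetItem:
    input: str
    expected_output: str

def run_experiment(items, generate, evaluate):
    """Run every item through `generate`, score it with `evaluate`, return (scores, pass rate)."""
    scores = [evaluate(generate(item.input), item.expected_output) for item in items]
    return scores, sum(scores) / len(scores)

KNOWN = {"France": "Paris", "Japan": "Tokyo", "Canada": "Ottawa"}

def generate_v1(country):
    # Stand-in for an LLM call with prompt version 1, which answers in a full sentence.
    return f"The capital of {country} is {KNOWN.get(country, 'unknown')}."

def generate_v2(country):
    # Stand-in for prompt version 2, which answers with just the city name.
    return KNOWN.get(country, "unknown")

def exact_match(output, expected):
    """Pass (1.0) only if the output equals the expected answer, ignoring case and whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0

items = [
    DatasetItem("France", "Paris"),
    DatasetItem("Japan", "Tokyo"),
    DatasetItem("Australia", "Canberra"),
]
for name, gen in [("v1", generate_v1), ("v2", generate_v2)]:
    scores, rate = run_experiment(items, gen, exact_match)
    print(name, scores, f"pass rate {rate:.0%}")
# v1 [0.0, 0.0, 0.0] pass rate 0%
# v2 [1.0, 1.0, 0.0] pass rate 67%
```

Note that v1's answers are factually fine but fail a strict exact-match check; choosing the evaluator is as much a design decision as choosing the prompt.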

Conclusion

Langfuse brings much-needed structure, transparency, and engineering discipline to the fast-moving world of LLMs. With its open-source foundation and flexible SDKs, it's the perfect companion for teams building with large language models at scale.

From real-time tracing to prompt versioning, dataset-based testing, and deep observability, Langfuse transforms your AI stack into a production-grade system you can trust.

If you're serious about shipping LLM-powered features, Langfuse is your all-in-one control tower.