RabbitMQ + Node.js: Build Resilient Event-Driven Microservices with Dead Letter Queues

Message processing fails. It happens. A downstream API times out, a database connection drops, or your code hits an edge case nobody anticipated. The question is: what happens to that message?

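Without proper error handling, a failed message is either lost for good or requeued endlessly, blocking everything behind it. Dead letter queues (DLQs), paired with a delayed retry queue, solve this: a failed message gets a bounded number of further attempts, and one that keeps failing is parked for inspection while the main queue keeps moving.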

The Setup

You need RabbitMQ running and the amqplib package installed:

npm install amqplib

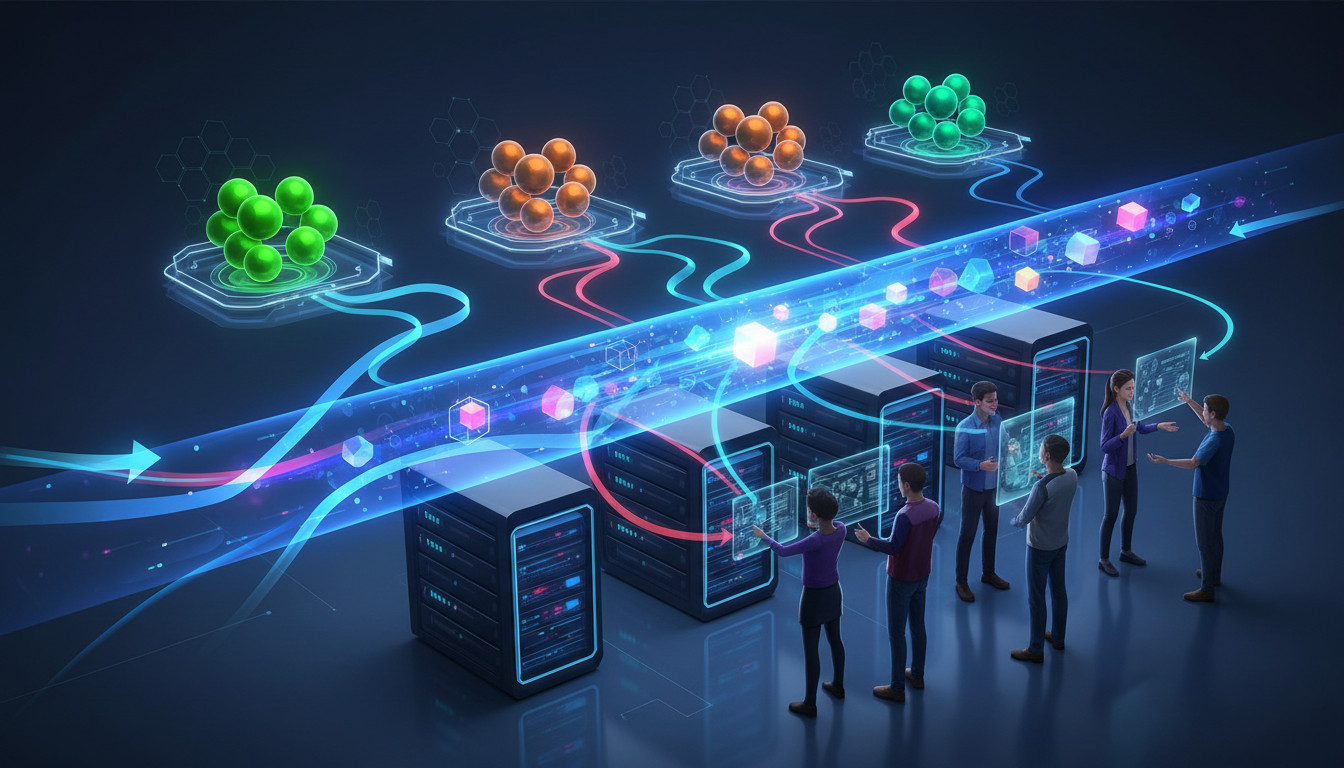

We'll create three queues: a main processing queue, a retry queue with a delay, and a dead letter queue for messages that exhaust their retries.

Queue Architecture

Here's the flow: messages enter the main queue and get processed. If processing fails, the message moves to a retry queue, waits out a delay, and returns to the main queue for another attempt. Once the initial attempt and all three retries have failed, the message lands in the dead letter queue for manual inspection.

const amqp = require('amqplib');

const MAIN_QUEUE = 'orders';
const RETRY_QUEUE = 'orders.retry';
const DLQ = 'orders.dlq';
const MAX_RETRIES = 3;
const RETRY_DELAY = 30000; // 30 seconds

async function setupQueues(channel) {
  // Dead letter queue - final destination for failed messages
  await channel.assertQueue(DLQ, { durable: true });

  // Retry queue - messages wait here before returning to main queue
  await channel.assertQueue(RETRY_QUEUE, {
    durable: true,
    arguments: {
      'x-dead-letter-exchange': '',
      'x-dead-letter-routing-key': MAIN_QUEUE,
      'x-message-ttl': RETRY_DELAY
    }
  });

  // Main processing queue
  await channel.assertQueue(MAIN_QUEUE, {
    durable: true,
    arguments: {
      'x-dead-letter-exchange': '',
      'x-dead-letter-routing-key': DLQ
    }
  });
}

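The x-dead-letter-exchange and x-dead-letter-routing-key arguments tell RabbitMQ where to send messages that are rejected without requeue or that expire. An empty exchange name means the default exchange, which routes by queue name. The x-message-ttl on the retry queue creates the delay: nothing consumes from that queue, so each message sits there until its TTL expires and is then dead-lettered back to the main queue.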
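When RabbitMQ dead-letters a message, it records the event in an x-death header: an array of entries that include the source queue, the reason (such as expired or rejected), and a count. The sketch below reads that header to show what the broker recorded. summarizeDeaths is a hypothetical helper, not part of amqplib, and the sample message is hand-built for illustration.

```javascript
// Hypothetical helper: summarize the x-death entries the broker attached.
// Assumes the usual entry shape with `queue`, `reason`, and `count` fields.
function summarizeDeaths(msg) {
  const headers = (msg.properties && msg.properties.headers) || {};
  const deaths = Array.isArray(headers['x-death']) ? headers['x-death'] : [];
  return deaths.map((entry) => ({
    queue: entry.queue,
    reason: entry.reason,
    count: Number(entry.count) || 0
  }));
}

// Hand-built example of a message that expired once in the retry queue
const sample = {
  properties: {
    headers: {
      'x-death': [{ queue: 'orders.retry', reason: 'expired', count: 1 }]
    }
  }
};

console.log(summarizeDeaths(sample));
```

Logging this summary in the consumer is one way to confirm that a message really travelled through the retry queue.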

The Consumer

Here's a consumer that tracks the retry count in a message header and routes failures explicitly:

async function startConsumer() {
  const connection = await amqp.connect('amqp://localhost');
  const channel = await connection.createChannel();
  await setupQueues(channel);

  // Process one message at a time
  await channel.prefetch(1);

  await channel.consume(MAIN_QUEUE, async (msg) => {
    if (!msg) return; // null means the broker cancelled this consumer

    const headers = msg.properties.headers || {};
    const retryCount = headers['x-retry-count'] || 0;

    try {
      const order = JSON.parse(msg.content.toString());
      await processOrder(order); // processOrder is your own business logic
      channel.ack(msg);
      console.log(`Order ${order.id} processed successfully`);
    } catch (error) {
      console.error(`Processing failed: ${error.message}`);

      if (retryCount >= MAX_RETRIES) {
        // Max retries exceeded - send to DLQ
        channel.sendToQueue(DLQ, msg.content, {
          persistent: true,
          headers: { ...headers, 'x-error': error.message }
        });
        channel.ack(msg);
        console.log(`Order moved to DLQ after ${MAX_RETRIES} retries`);
      } else {
        // Schedule retry
        channel.sendToQueue(RETRY_QUEUE, msg.content, {
          persistent: true,
          headers: { ...headers, 'x-retry-count': retryCount + 1 }
        });
        channel.ack(msg);
        console.log(`Order scheduled for retry ${retryCount + 1}/${MAX_RETRIES}`);
      }
    }
  });
}

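Notice that we always ack the original message, even on failure, after publishing a copy to the retry queue or the DLQ. Routing failures manually, rather than calling nack with requeue, gives us control over the retry count and the delay; a plain requeue would redeliver the message immediately, with no limit on attempts. With this consumer, the dead-letter arguments on the main queue act only as a fallback for messages rejected without requeue.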
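The routing decision in the catch block can be isolated as a pure function, which makes the attempt arithmetic easy to check. This is a sketch using the same header name and retry limit as the consumer; planFailure is a hypothetical name, not something amqplib provides.

```javascript
// Hypothetical helper mirroring the consumer's catch block.
// Returns where a failed message should go and the headers to send with it.
function planFailure(headers, error, maxRetries = 3) {
  const retryCount = Number(headers['x-retry-count']) || 0;
  if (retryCount >= maxRetries) {
    return { target: 'dlq', headers: { ...headers, 'x-error': error.message } };
  }
  return { target: 'retry', headers: { ...headers, 'x-retry-count': retryCount + 1 } };
}

// Walk one message through repeated failures until it is parked
let currentHeaders = { 'x-retry-count': 0 };
const trail = [];
for (;;) {
  const plan = planFailure(currentHeaders, new Error('timeout'));
  trail.push(plan.target);
  currentHeaders = plan.headers;
  if (plan.target === 'dlq') break;
}

console.log(trail); // [ 'retry', 'retry', 'retry', 'dlq' ]
```

The trail has four entries: the message is attempted once, retried three times, and parked on the fourth failure.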

The Publisher

Sending messages is straightforward:

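async function publishOrder(order) {
  // For brevity this opens a connection per call; a long-running service
  // should create one connection and channel at startup and reuse them.
  const connection = await amqp.connect('amqp://localhost');
  try {
    const channel = await connection.createChannel();
    await setupQueues(channel);

    channel.sendToQueue(MAIN_QUEUE, Buffer.from(JSON.stringify(order)), {
      persistent: true,
      headers: { 'x-retry-count': 0 }
    });
    console.log(`Order ${order.id} published`);

    await channel.close();
  } finally {
    // Close the connection even if publishing throws
    await connection.close();
  }
}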

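The persistent: true flag asks RabbitMQ to write the message to disk. Combined with a durable queue, this lets the message survive a broker restart. It is not an absolute guarantee, though: a message can still be lost if the broker goes down before the write completes. Publisher confirms close that gap.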
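A minimal sketch of publishing with confirms, assuming the channel was created with amqplib's connection.createConfirmChannel(). On a confirm channel, sendToQueue accepts a callback that fires once the broker has acknowledged or rejected the message. publishWithConfirm is a hypothetical wrapper name.

```javascript
// Sketch: wrap a confirm channel's callback in a promise.
// `channel` is assumed to come from connection.createConfirmChannel().
function publishWithConfirm(channel, queue, payload, headers = {}) {
  return new Promise((resolve, reject) => {
    channel.sendToQueue(
      queue,
      Buffer.from(JSON.stringify(payload)),
      { persistent: true, headers },
      // The callback receives an error if the broker rejected the message
      (err) => (err ? reject(err) : resolve())
    );
  });
}
```

A caller would then write something like await publishWithConfirm(channel, 'orders', order, { 'x-retry-count': 0 }) and treat a rejected promise as "not safely stored, publish again".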

Monitoring Your Queues

Add a simple health check to monitor queue depths:

async function getQueueStats() {
  const connection = await amqp.connect('amqp://localhost');
  try {
    const channel = await connection.createChannel();

    // checkQueue fails (and closes the channel) if the queue does not exist
    const main = await channel.checkQueue(MAIN_QUEUE);
    const retry = await channel.checkQueue(RETRY_QUEUE);
    const dlq = await channel.checkQueue(DLQ);

    return {
      pending: main.messageCount,
      retrying: retry.messageCount,
      failed: dlq.messageCount
    };
  } finally {
    // Close the connection even if a check fails
    await connection.close();
  }
}

Set up alerts when failed exceeds a threshold. A growing DLQ means something needs attention.
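As a sketch of that threshold check, the helper below compares the stats object against per-queue limits and returns a list of alert messages. findAlerts and the default limits are assumptions chosen for illustration; tune them to your own traffic.

```javascript
// Hypothetical helper: compare queue depths against per-queue limits.
// `stats` has the shape returned by getQueueStats(); the limits are examples.
function findAlerts(stats, limits = { pending: 1000, retrying: 100, failed: 10 }) {
  return Object.keys(limits)
    .filter((key) => (stats[key] || 0) > limits[key])
    .map((key) => `${key} queue depth ${stats[key]} exceeds ${limits[key]}`);
}

console.log(findAlerts({ pending: 12, retrying: 3, failed: 25 }));
// [ 'failed queue depth 25 exceeds 10' ]
```

Running this on a timer and forwarding a non-empty result to your alerting channel is enough for a first version.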

DLQ Processing

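Messages in the dead letter queue need manual handling. Here's a utility that pulls one message from the DLQ, prints it along with the recorded error, and sends it back to the main queue with a fresh retry count: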

async function reprocessDLQ() {
  const connection = await amqp.connect('amqp://localhost');
  try {
    const channel = await connection.createChannel();

    // get() resolves to false when the queue is empty
    const msg = await channel.get(DLQ);
    if (!msg) {
      console.log('DLQ is empty');
      return;
    }

    // Print the raw payload; it may not be valid JSON
    const headers = msg.properties.headers || {};
    console.log('Failed order:', msg.content.toString());
    console.log('Error:', headers['x-error']);

    // Requeue to the main queue with a fresh retry count
    channel.sendToQueue(MAIN_QUEUE, msg.content, {
      persistent: true,
      headers: { 'x-retry-count': 0 }
    });
    channel.ack(msg);

    await channel.close();
  } finally {
    // Close the connection on every path, including the empty-queue return
    await connection.close();
  }
}
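Once the underlying fault is fixed, you will usually want to replay more than one message. The sketch below moves a bounded batch from the DLQ back to the main queue; replayDLQ is a hypothetical helper, `channel` is assumed to be an open amqplib channel, and the default queue names match the constants used earlier.

```javascript
// Sketch: move up to `limit` messages from the DLQ back to the main queue.
// Returns the number of messages moved.
async function replayDLQ(channel, limit = 10, dlq = 'orders.dlq', mainQueue = 'orders') {
  let moved = 0;
  while (moved < limit) {
    const msg = await channel.get(dlq); // resolves to false when empty
    if (!msg) break;
    channel.sendToQueue(mainQueue, msg.content, {
      persistent: true,
      headers: { 'x-retry-count': 0 } // start the retry cycle over
    });
    channel.ack(msg);
    moved += 1;
  }
  return moved;
}
```

The limit keeps a replay from flooding the main queue; replaying a small batch first is a cheap way to confirm the fix before moving the rest.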

Troubleshooting

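Messages stuck in retry queue: Check that x-message-ttl is set correctly; messages won't move until the TTL expires. The arguments of an existing queue cannot be changed. Redeclaring the queue with a different TTL fails with a PRECONDITION_FAILED channel error, so delete and recreate the queue to change the delay.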

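Consumer stops processing: With prefetch(1), RabbitMQ delivers no further messages until the current one is acked. If processOrder never settles (for example, a downstream call with no timeout), the consumer stalls. Put timeouts on downstream calls, and keep every async operation inside the try-catch so that rejections are handled.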

DLQ growing rapidly: Either your processing logic has a bug or a downstream service is consistently failing. Check the x-error header for patterns.

Connection drops randomly: Enable heartbeats in your connection options: amqp.connect('amqp://localhost?heartbeat=60').

Messages processed twice: Ensure your processOrder function is idempotent. Use order IDs to detect and skip duplicates.
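A minimal sketch of that duplicate check, wrapping a handler so that each order ID is processed at most once. makeIdempotent is a hypothetical helper, and the in-memory Set is an assumption for illustration only: it is lost on restart and not shared between consumer processes, so a real deployment would use a shared store such as a database unique constraint.

```javascript
// Sketch: skip orders whose ID has already been processed successfully.
function makeIdempotent(handler, seen = new Set()) {
  return async function handleOnce(order) {
    if (seen.has(order.id)) return false; // duplicate delivery, skip it
    await handler(order);
    seen.add(order.id); // mark only after the handler succeeds
    return true;
  };
}
```

Because the ID is recorded only after the handler resolves, an order that fails is not marked as done and can still be retried.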

Deploy RabbitMQ on Elestio

Setting up RabbitMQ with proper clustering and management UI takes time. Deploy RabbitMQ on Elestio and get a production-ready instance with the management plugin enabled, automated backups, and monitoring built in.

Thanks for reading!