RAGFlow: Free Open Source Generative AI Platform

The rise of generative AI has created a growing need for tools that combine large language models (LLMs) with private or organizational knowledge in a flexible and cost-effective way. While proprietary platforms dominate the market, open-source alternatives are catching up — offering developers more control, transparency, and extensibility.

RAGFlow is one of the most promising free, open-source platforms in this space. Built around Retrieval-Augmented Generation (RAG) workflows, RAGFlow enables you to create AI assistants and agents that can reason, retrieve, and respond using both LLMs and your custom data.

Whether you are building internal tools, automating tasks, or deploying chatbots, RAGFlow provides an accessible and powerful foundation.


Let’s break down its key features.

Model Providers

RAGFlow supports multiple model providers, making it easy to integrate with:

- 🧠 Hosted models: OpenAI (GPT-4), DeepSeek, and other cloud providers.

- 🖥️ Local Models (via Ollama): Run open-source models such as LLaMA, Mistral, or Mixtral directly on your hardware.

- 🔌 Custom APIs: RAGFlow is modular, so you can plug in other language models served behind a compatible API, such as an OpenAI-compatible endpoint.

This flexibility allows developers to test different providers and balance performance, cost, and privacy based on their use case.
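Many providers, including local Ollama servers, accept requests in the OpenAI chat-completions format, which is what makes swapping providers cheap. The sketch below builds (but does not send) such a request using only Python's standard library. The provider table, model names, and the `build_chat_request` helper are illustrative assumptions, not RAGFlow configuration.

```python
import json
import urllib.request

# Hypothetical provider table: only the base URL, model name, and key differ.
PROVIDERS = {
    "openai": {"base_url": "https://api.openai.com/v1", "model": "gpt-4"},
    # Ollama serves an OpenAI-compatible API under /v1 on its default port.
    "ollama": {"base_url": "http://localhost:11434/v1", "model": "llama3"},
}


def build_chat_request(provider: str, prompt: str, api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    cfg = PROVIDERS[provider]
    payload = {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers such as Ollama typically need no key
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=cfg["base_url"].rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    for name in PROVIDERS:
        req = build_chat_request(name, "Summarize our refund policy.")
        print(req.get_method(), req.full_url)
```

Because the request shape is shared, moving a workload from a cloud model to a local one is mostly a matter of changing one table entry, which is where the cost, performance, and privacy trade-off gets made.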

Knowledge Base

At the heart of any RAG-based system is the knowledge base — and RAGFlow delivers a simple yet effective approach:

- 📄 Document Uploads: Easily ingest PDFs, TXT, DOCX, Markdown, and web pages.

- 🗃️ Indexing: Your documents are parsed, chunked, embedded, and stored in RAGFlow's document engine (Elasticsearch by default, with Infinity as a supported alternative).

- 🔍 Fast Retrieval: When users ask a question, relevant chunks are retrieved and fed into the LLM to ground the answer in your data.

Grounding answers in retrieved passages makes responses more accurate and easier to verify, even on domain-specific content, which is a key benefit for teams building internal knowledge bots.
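To make the chunk, embed, and retrieve loop concrete, here is a deliberately tiny, dependency-free sketch. It substitutes word-count vectors and cosine similarity for a learned embedding model, and an in-memory list for the document store. RAGFlow's own parsing and chunking templates are considerably more sophisticated; `chunk_words`, `embed`, and `ToyVectorStore` are hypothetical names used only for illustration.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last window already reaches the end
            break
    return chunks


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (a real system uses a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory list of (chunk, vector) pairs searched by brute force."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add_document(self, text: str, size: int = 40, overlap: int = 10) -> None:
        for chunk in chunk_words(text, size, overlap):
            self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add_document("Refunds are issued within 14 days of purchase if the item is unused.")
    store.add_document("Our office is open Monday to Friday from 9am to 5pm.")
    print(store.search("How long do refunds take?", k=1))
```

The overlap between chunks is a common design choice: it reduces the chance that a sentence answering the question is split across two chunks and ranked poorly in both.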

AI Chatbot Assistants

RAGFlow includes a built-in UI to create and test chatbot assistants. These assistants can:

- ✍️ Use system prompts to define behavior and tone.

- 🧠 Tap into your knowledge base for RAG-based answers.

- 🌐 Connect to multiple model providers.

- 🔐 Be hosted publicly or used privately via an API.

You can customize assistant personalities and capabilities — perfect for building internal support bots, documentation guides, or sales chat assistants.

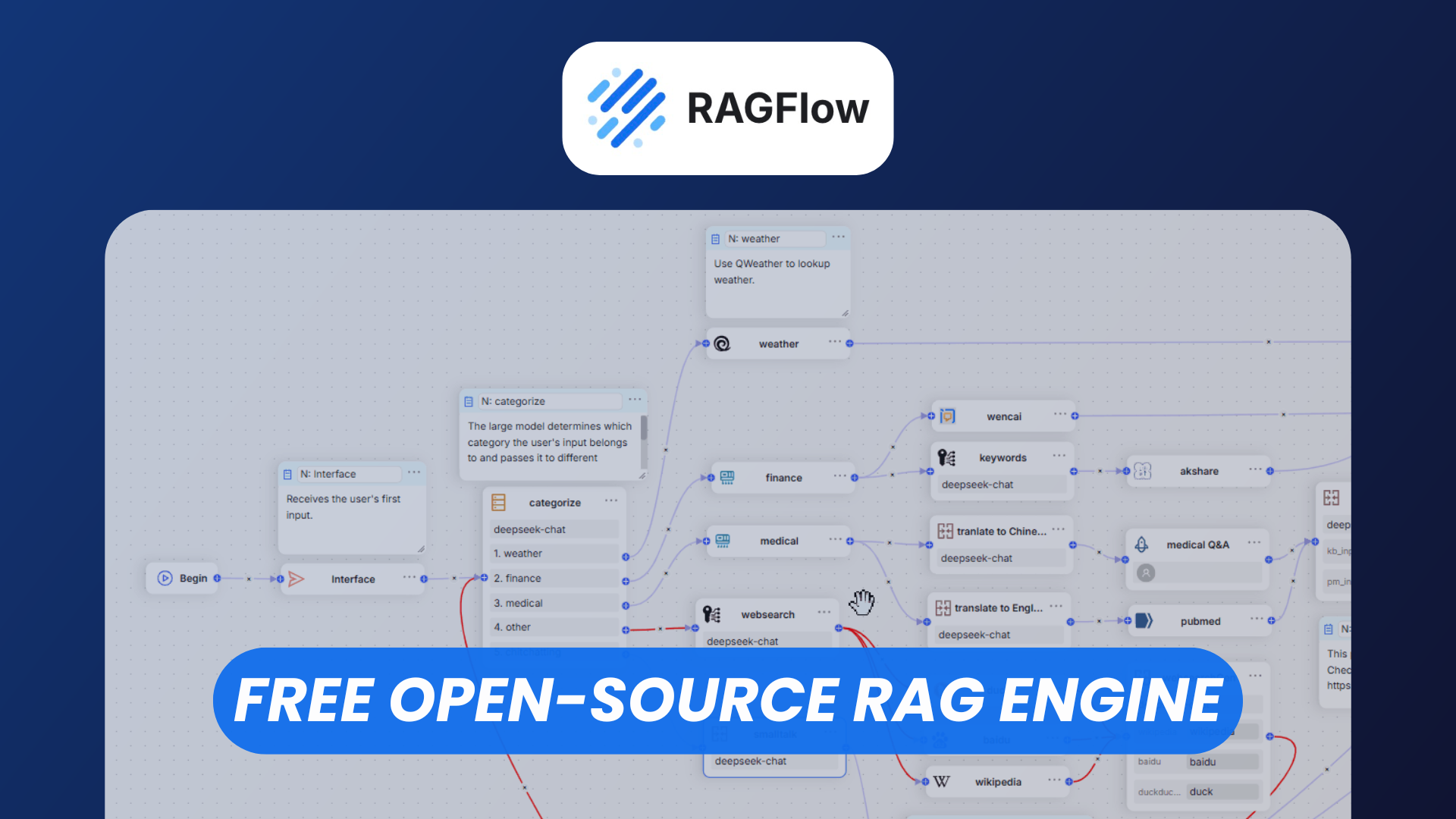
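Under the hood, an assistant of this kind combines three ingredients into one model request: a system prompt that sets tone and rules, the passages retrieved from the knowledge base, and the user's question. The sketch below shows one way to assemble them into OpenAI-style chat messages. The template text, the `{knowledge}` placeholder, and `build_messages` are assumptions for illustration, not RAGFlow's built-in prompt.

```python
# Hypothetical system prompt: persona plus a slot for retrieved knowledge.
SYSTEM_TEMPLATE = (
    "You are a friendly support assistant for {company}. "
    "Answer only from the knowledge below. If the answer is not there, say you don't know.\n\n"
    "Knowledge:\n{knowledge}"
)


def build_messages(question: str, chunks: list[str], company: str = "Acme") -> list[dict[str, str]]:
    """Combine a persona system prompt, retrieved chunks, and the user question."""
    # Number the chunks so answers can refer back to specific sources.
    knowledge = "\n".join(f"[{i}] {c}" for i, c in enumerate(chunks, start=1))
    if not knowledge:
        knowledge = "(no relevant documents found)"
    system = SYSTEM_TEMPLATE.format(company=company, knowledge=knowledge)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    for msg in build_messages("How long do refunds take?", ["Refunds are issued within 14 days."]):
        print(msg["role"], "->", msg["content"])
```

Telling the model to admit when the knowledge is missing is a common way to keep a documentation or support bot from inventing answers when retrieval comes back empty.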

AI Agents Node Editor

One of RAGFlow’s standout features is its Node Editor for AI agents.

- 🧩 Visual Workflows: Use a drag-and-drop interface to chain together nodes like LLM prompts, tools, knowledge bases, conditionals, and API calls.

- 🤖 Agents with Memory & Tools: Build autonomous agents that can reason, retrieve data, call APIs, and iterate over tasks.

- 🔧 Custom Tooling: Create your own plugins to expand what agents can do (e.g., database queries, file editing, or Slack messaging).

- 🎯 Targeted Automation: Agents are ideal for handling repetitive knowledge work, automating research tasks, or running multi-step flows.

This feature makes RAGFlow much more than a chatbot platform — it becomes a foundation for building AI-driven workflows and assistants tailored to your business logic.
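Conceptually, a node graph like this is a set of steps that read and write shared state and decide which step runs next. The sketch below mimics that with plain Python functions: a conditional node routes the request, tool nodes call entries in a small registry, and a step limit guards against endless loops. The node names, the `TOOLS` registry, and the keyword-based routing are hypothetical stand-ins for what you would configure visually (and back with LLM calls) in the editor; this is not RAGFlow's internal execution engine.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], Optional[str]]  # returns the next node's name, or None to stop

# Hypothetical tool registry: plain functions that nodes can call by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "shout": lambda text: text.upper(),
}


def classify(state: State) -> Optional[str]:
    # Conditional node: a keyword check here, typically an LLM classifier in practice.
    return "count_tool" if "count" in state["input"].lower() else "echo"


def count_tool(state: State) -> Optional[str]:
    state["output"] = TOOLS["word_count"](state["input"])
    return "finish"


def echo(state: State) -> Optional[str]:
    state["output"] = TOOLS["shout"](state["input"])
    return "finish"


def finish(state: State) -> Optional[str]:
    state["done"] = True
    return None


WORKFLOW: dict[str, Node] = {
    "classify": classify,
    "count_tool": count_tool,
    "echo": echo,
    "finish": finish,
}


def run(workflow: dict[str, Node], start: str, user_input: str, max_steps: int = 20) -> State:
    """Execute nodes from `start`, following each node's returned successor."""
    state: State = {"input": user_input, "trace": []}
    node: Optional[str] = start
    while node is not None:
        if len(state["trace"]) >= max_steps:
            raise RuntimeError("workflow did not terminate")
        state["trace"].append(node)
        node = workflow[node](state)
    return state


if __name__ == "__main__":
    result = run(WORKFLOW, "classify", "count these words")
    print(result["trace"], result["output"])
```

Keeping all intermediate results in one state object is what lets later nodes reuse earlier outputs, which is the same idea behind wiring one node's output into another in a visual editor.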

Conclusion

If you're looking for a self-hosted, open-source alternative to expensive proprietary LLM platforms, RAGFlow is a compelling choice. It combines:

- ✅ Support for both cloud and local models

- ✅ Retrieval-augmented generation with vector search

- ✅ A clean assistant interface for chatbots

- ✅ A powerful agent workflow editor

Whether you are building AI copilots, customer support bots, or internal tools, RAGFlow helps you harness the power of generative AI with full control over your data and workflows.